AI that lights up the moon, improvises grammar and teaches robots to walk like humans • TechCrunch


Research in the field of machine learning and AI, now a key technology in practically every industry and company, is far too voluminous for anyone to read it all. This column, Perceptron, aims to collect some of the most relevant recent discoveries and papers (particularly in, but not limited to, artificial intelligence) and explain why they matter.

Over the past few weeks, scientists developed an algorithm to uncover fascinating details about the moon’s dimly lit (and in some cases pitch-black) asteroid craters. Elsewhere, MIT researchers trained an AI model on textbooks to see whether it could independently figure out the rules of a specific language. And teams at DeepMind and Microsoft investigated whether motion capture data could be used to teach robots how to perform specific tasks, like walking.

With the pending (and predictably delayed) launch of Artemis I, lunar science is again in the spotlight. Ironically, however, it’s the darkest regions of the moon that are potentially the most interesting, since they may house water ice that can be used for many purposes. It’s easy to spot the darkness, but what’s in there? An international team of image experts has applied ML to the problem with some success.

Though the craters lie in deepest darkness, the Lunar Reconnaissance Orbiter still captures the occasional photon from inside, and the team put together years of these underexposed (but not totally black) exposures with a “physics-based, deep learning-driven post-processing tool” described in Geophysical Research Letters. The result is that “visible routes into the permanently shadowed regions can now be designed, greatly reducing risks to Artemis astronauts and robotic explorers,” according to David Kring of the Lunar and Planetary Institute.
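To see why years of nearly black exposures are still useful, here is a minimal sketch of plain frame averaging. It is not the team's deep learning tool, only the simpler statistical idea behind combining many weak readings: random noise tends to cancel while the faint signal stays. The pixel values, the Gaussian noise model and the function names are all assumptions made for illustration.

```python
import random
import statistics

def simulate_exposure(true_signal, noise_sigma, rng):
    """Return one noisy reading per pixel (hypothetical Gaussian noise model)."""
    return [s + rng.gauss(0.0, noise_sigma) for s in true_signal]

def stack_exposures(exposures):
    """Average each pixel across all exposures."""
    return [statistics.fmean(pixel_values) for pixel_values in zip(*exposures)]

def rms_error(estimate, truth):
    """Root-mean-square difference between an estimate and the true signal."""
    return (sum((e - t) ** 2 for e, t in zip(estimate, truth)) / len(truth)) ** 0.5

rng = random.Random(0)  # fixed seed so the run is repeatable
true_signal = [0.02, 0.05, 0.03, 0.08]  # made-up faint pixel brightnesses
exposures = [simulate_exposure(true_signal, 1.0, rng) for _ in range(2000)]

single_error = rms_error(exposures[0], true_signal)
stacked_error = rms_error(stack_exposures(exposures), true_signal)
print(f"one exposure: {single_error:.3f}, stacked: {stacked_error:.3f}")
```

In this toy setup the noise in the average shrinks roughly with the square root of the number of exposures, which is why a long archive of dim frames can reveal structure that no single frame shows.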

Let there be light! The interior of the crater is reconstructed from stray photons. Image Credits: V. T. Bickel, B. Moseley, E. Hauber, M. Shirley, J.-P. Williams and D. A. Kring

They’ll have flashlights, we imagine, but it’s good to have a general idea of where to go beforehand, and of course it could affect where robotic exploration or landers focus their efforts.

However useful, there’s nothing mysterious about turning sparse data into an image. But in the world of linguistics, AI is making fascinating inroads into how and whether language models really know what they know. In the case of learning a language’s grammar, an MIT experiment found that a model trained on multiple textbooks was able to build its own model of how a given language worked, to the point where its grammar for Polish, say, could successfully answer textbook problems about it.

“Linguists have thought that in order to really understand the rules of a human language, to empathize with what it is that makes the system tick, you have to be human. We wanted to see if we can emulate the kinds of knowledge and reasoning that humans (linguists) bring to the task,” said MIT’s Adam Albright in a news release. It’s very early research on this front but promising in that it shows that subtle or hidden rules can be “understood” by AI models without explicit instruction in them.

But the experiment didn’t directly address a key, open question in AI research: how to prevent language models from outputting toxic, discriminatory or misleading language. New work out of DeepMind does tackle this, taking a philosophical approach to the problem of aligning language models with human values.

Researchers at the lab posit that there’s no “one-size-fits-all” path to better language models, because the models need to embody different traits depending on the contexts in which they’re deployed. For example, a model designed to assist in scientific study would ideally only make true statements, while an agent playing the role of a moderator in a public debate would exercise values like toleration, civility and respect.

So how can these values be instilled in a language model? The DeepMind co-authors don’t recommend one specific approach. Instead, they suggest models can cultivate more “robust” and “respectful” conversations over time via processes they call context construction and elucidation. As the co-authors explain: “Even when a person is not aware of the values that govern a given conversational practice, the agent may still help the human understand these values by prefiguring them in conversation, making the course of communication deeper and more fruitful for the human speaker.”

Google’s LaMDA language model responding to a question. Image Credits: Google

Sussing out the most promising methods to align language models takes immense time and resources, financial and otherwise. But in domains beyond language, particularly scientific domains, that might not be the case for much longer, thanks to a $3.5 million grant from the National Science Foundation (NSF) awarded to a team of scientists from the University of Chicago, Argonne National Laboratory and MIT.

With the NSF grant, the recipients plan to build what they describe as “model gardens,” or repositories of AI models designed to solve problems in areas like physics, mathematics and chemistry. The repositories will link the models with data and computing resources as well as automated tests and screens to validate their accuracy, ideally making it simpler for scientific researchers to test and deploy the tools in their own studies.

“A user can come to the [model] garden and see all that information at a glance,” Ben Blaiszik, a data science researcher at Globus Labs involved with the project, said in a press release. “They can cite the model, they can learn about the model, they can contact the authors, and they can invoke the model themselves in a web environment on leadership computing facilities or on their own computer.”

Meanwhile, over in the robotics domain, researchers are building a platform for AI models not with software, but with hardware: neuromorphic hardware, to be exact. Intel claims the latest generation of its experimental Loihi chip can enable an object recognition model to “learn” to identify an object it’s never seen before using up to 175 times less power than if the model were running on a CPU.

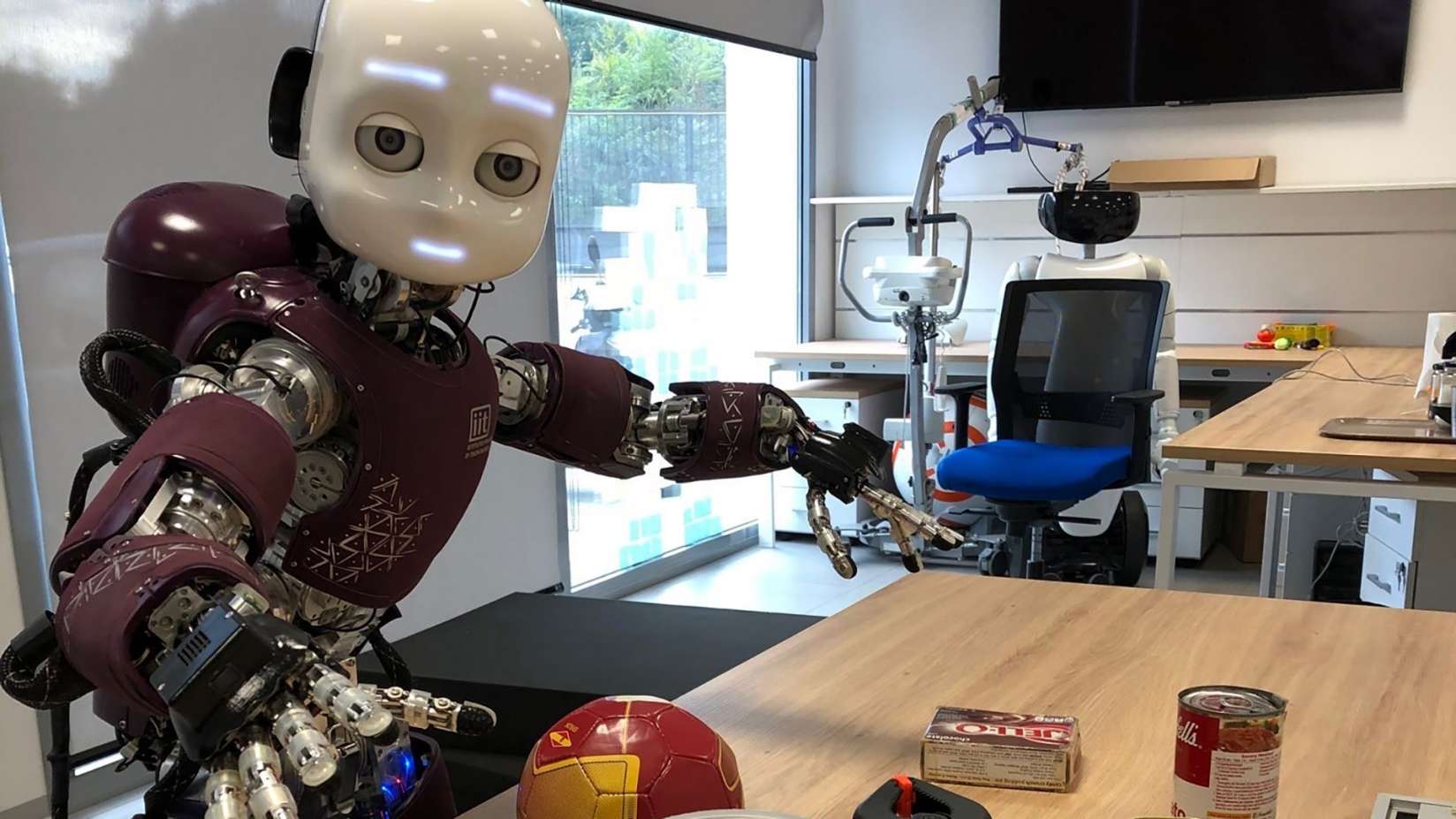

A humanoid robot equipped with one of Intel’s experimental neuromorphic chips. Image Credits: Intel

Neuromorphic systems attempt to mimic the biological structures in the nervous system. While traditional machine learning systems are either fast or power efficient, neuromorphic systems achieve both speed and efficiency by using nodes to process information and connections between the nodes to transfer electrical signals using analog circuitry. The systems can modulate the amount of power flowing between the nodes, allowing each node to perform processing, but only when required.
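The “only when required” idea can be illustrated in software with a leaky integrate-and-fire neuron, a common textbook model of a spiking node. This is a minimal sketch, not a description of how Intel’s Loihi chip works; the leak factor, threshold and input values are assumptions chosen for the example.

```python
def run_lif_neuron(input_currents, leak=0.9, threshold=1.0):
    """Simulate one leaky integrate-and-fire node.

    Returns the time steps at which the node fires a spike.
    """
    potential = 0.0
    spike_times = []
    for step, current in enumerate(input_currents):
        # Stored charge leaks away a little each step, then new input is added.
        potential = potential * leak + current
        if potential >= threshold:
            # The node does work (emits a spike) only when the threshold is crossed.
            spike_times.append(step)
            potential = 0.0  # reset after firing
    return spike_times

# Made-up input: two close pulses, then a weak one arriving much later.
inputs = [0.0, 0.6, 0.6, 0.0, 0.0, 0.0, 0.3, 0.0]
spikes = run_lif_neuron(inputs)
print(spikes)  # → [2]
```

The two pulses that arrive close together add up and trigger a spike, while the isolated weak pulse leaks away without causing any output, so the node stays silent for most of the run.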

Intel and others believe that neuromorphic computing has applications in logistics, for example powering a robot built to help with manufacturing processes. It’s theoretical at this point (neuromorphic computing has its downsides), but perhaps one day that vision will come to pass.

Image Credits: DeepMind

Closer to reality is DeepMind’s recent work in “embodied intelligence,” or using human and animal motions to teach robots to dribble a ball, carry boxes and even play soccer. Researchers at the lab devised a setup to record data from motion trackers worn by humans and animals, from which an AI system learned to infer how to complete new actions, like how to walk in a circular motion. The researchers claim that this approach translated well to real-world robots, for example allowing a four-legged robot to walk like a dog while simultaneously dribbling a ball.

Coincidentally, Microsoft earlier this summer released a library of motion capture data intended to spur research into robots that can walk like humans. Called MoCapAct, the library contains motion capture clips that, when used with other data, can be used to create agile bipedal robots, at least in simulation.

“[Creating this dataset] has taken the equivalent of 50 years over many GPU-equipped [servers] … a testament to the computational hurdle MoCapAct removes for other researchers,” the co-authors of the work wrote in a blog post. “We hope the community can build off of our dataset and work to do incredible research in the control of humanoid robots.”

Peer review of scientific papers is invaluable human work, and it’s unlikely AI will take over there, but it may help make sure that peer reviews are actually helpful. A Swiss research group has been looking at model-based evaluation of peer reviews, and their early results are mixed, in a good way. There wasn’t some obvious good or bad method or trend, and publication impact rating didn’t seem to predict whether a review was thorough or helpful. That’s okay though, because although the quality of reviews differs, you wouldn’t want there to be a systematic lack of good review everywhere but major journals, for instance. Their work is ongoing.

Last, for anyone concerned about creativity in this domain, here is a personal project by Karen X. Cheng that shows how a bit of ingenuity and hard work can be combined with AI to produce something truly original.

